Building an Image Processing Service in Go

Over the course of the last few months, I’ve ported ImgKit, my image processing service, from Ruby to Go. This was my first project in Go and I wanted to document the technical choices I made and how the whole process went.


What’s ImgKit exactly?

ImgKit has two main components:

- REST API. Send a POST request with an image URL and ImgKit will download the file and extract various information from it (EXIF metadata, dimensions, Computer Vision annotations, color palette).

- Image server. ImgKit can serve dynamic thumbnails and screenshots (e.g. for social media cards) and proxy external images, with built-in caching and a CDN.

I find myself building these types of features over and over again, and I wanted to solve the problem once with a universal service that can be plugged into any website [1].

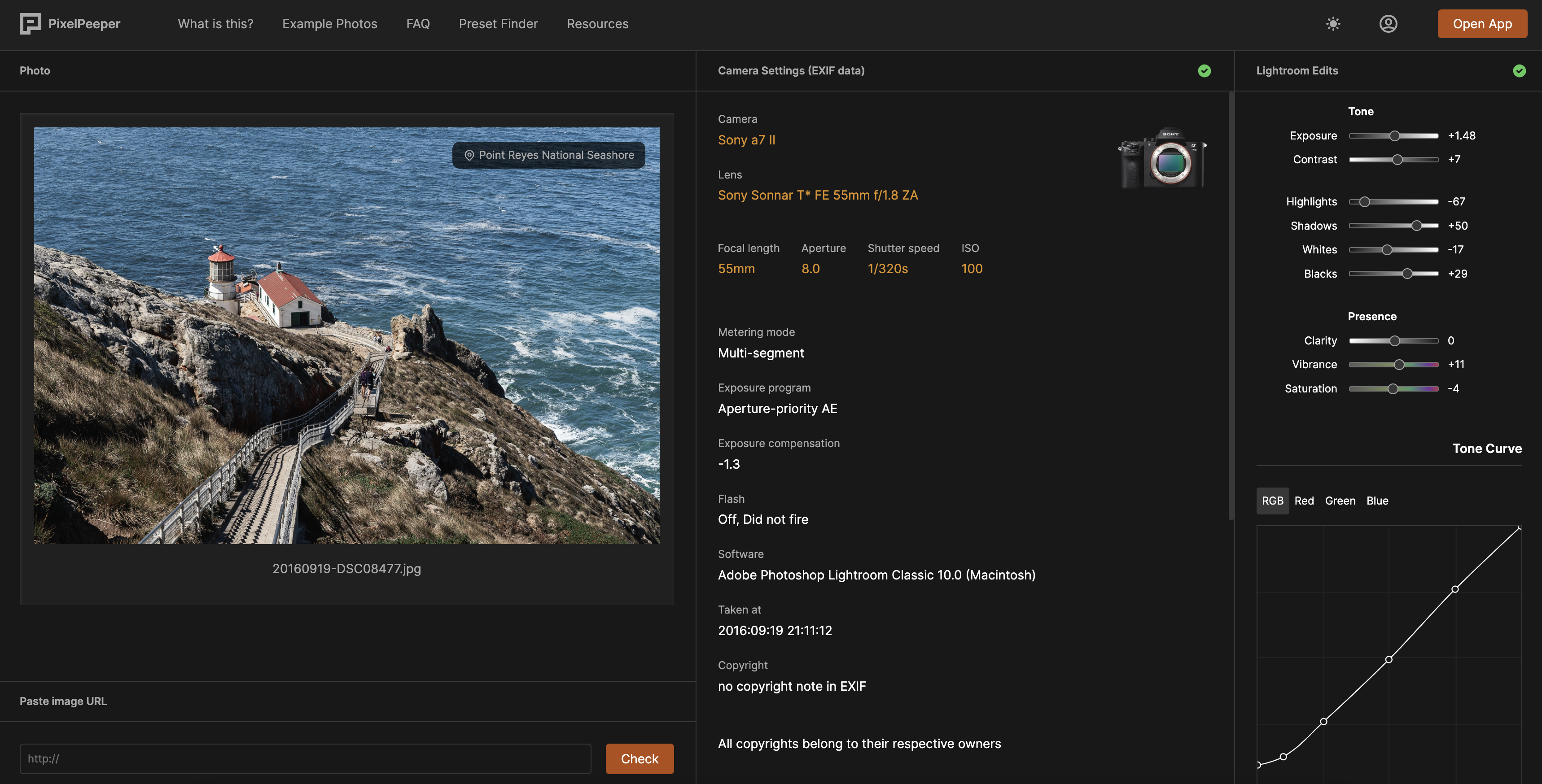

But the main use case was PixelPeeper, my mini web app for photographers. PixelPeeper pulls camera information (EXIF) and Lightroom edits (XMP) from JPG files and turns them into downloadable Lightroom presets. It can also detect landmarks, extract a color palette and do a few other things. ImgKit makes it all possible [2]. Here’s how it looks.

Why Go?

ImgKit was originally written in Ruby. Ruby may not be the first language that comes to mind when you think of efficient file processing, but it allowed me to ship the first version in a matter of days. At the time, ImgKit only needed to process a few hundred requests a day (at most) and I figured Ruby could handle it. However, after a few months, as the load increased, the app required more of my attention. It was leaking memory and needed regular restarts and more resources, while still being slow. Instead of trying to optimize the code, I decided to rewrite the entire app, using the opportunity to learn something new.

Go seemed to check all the boxes for this type of project: fast, compiled, statically typed, memory-efficient (compared to Ruby), and old enough to have a rich ecosystem and googlable answers [3].

After the switch to Go, ImgKit uses less than 1GB of RAM under the same load (the Ruby version needed 16GB to function properly) and hasn’t needed any regular attention so far.

Technical details

ImgKit currently consists of two binaries: api (serving HTTP requests) and worker (background tasks). I’m using Gin, a Postgres database (with pgx and goose), ksuid for random global IDs, libvips for image processing, zerolog for logs and Sentry for error reporting.

Image processing API

The main part of the REST API is the /images endpoint:

    curl -X POST http://pixelpeeper.imgkit.io/api/images \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer <API_KEY>" \
      --data '{
        "url": "https://pixelpeeper.s3.amazonaws.com/example-photos/20160910-DSC06091.jpg",
        "passthrough": { "id_passed_back_to_the_client": 123 },
        "exif": true
      }' | jq

This request triggers an asynchronous task that downloads the image, extracts metadata (dimensions, MIME type, EXIF), and calculates the checksum and the pHash/dHash (for deduplication). Once the image is processed, ImgKit sends a webhook, so the client can fetch the information.

The result may look like this:

    {
      "id": "img_2Ifubn3mGqAfzZulPcXrsPoyagM",
      "created_at": "2022-12-09T12:23:52.933462Z",
      "updated_at": "2022-12-09T12:23:55.902671Z",
      "status": "completed",
      "url": "https://pixelpeeper.s3.amazonaws.com/example-photos/20160910-DSC06091.jpg",
      "final_url": "https://pixelpeeper.s3.amazonaws.com/example-photos/20160910-DSC06091.jpg",
      "filename": "20160910-DSC06091.jpg",
      "content_type": "image/jpeg",
      "checksum": "d55948204b22994a6f27b463d860bd1b71936e0f9bca8362787500c80a0a523c",
      "byte_size": 844339,
      "width": 1500,
      "height": 1000,
      "exif": {
        "ISO": 100,
        "Make": "SONY",
        "Flash": "Off, Did not fire",
        "Model": "ILCE-7M2",
        "FNumber": 10,
        "Contrast": "Low",
        "LensInfo": "35mm f/2.8",
        "Software": "Adobe Photoshop Lightroom Classic 10.0 (Macintosh)",
        "LensModel": "FE 35mm F2.8 ZA",
        "SceneType": "Directly photographed",
        "Sharpness": "Normal",
        "ColorSpace": "sRGB",
        "CreateDate": "2016:09:10 18:31:59",
        "FileSource": "Digital Camera",
        "ModifyDate": "2020:11:21 13:25:33",
        "OffsetTime": "+01:00",
        "Saturation": "Normal",
        "Compression": "JPEG (old-style)",
        "ExifVersion": "0231",
        "FocalLength": "35.0 mm",
        "LightSource": "Unknown",
        "XResolution": 240,
        "YResolution": 240,
        "ExposureMode": "Auto",
        "ExposureTime": "1/250",
        "MeteringMode": "Multi-segment",
        "WhiteBalance": "Auto",
        "ApertureValue": 10,
        "CustomRendered": "Normal",
        "ResolutionUnit": "inches",
        "ThumbnailImage": "(Binary data 9456 bytes, use -b option to extract)",
        "BrightnessValue": 10.7671875,
        "ExposureProgram": "Aperture-priority AE",
        "SensitivityType": "Recommended Exposure Index",
        "ThumbnailLength": 9456,
        "ThumbnailOffset": 942,
        "DateTimeOriginal": "2016:09:10 18:31:59",
        "DigitalZoomRatio": 1,
        "MaxApertureValue": 2.8,
        "SceneCaptureType": "Standard",
        "ShutterSpeedValue": "1/250",
        "ExposureCompensation": 0,
        "FocalPlaneXResolution": 1675.257385,
        "FocalPlaneYResolution": 1675.257385,
        "FocalLengthIn35mmFormat": "35 mm",
        "FocalPlaneResolutionUnit": "cm",
        "RecommendedExposureIndex": 100
      }
    }

AI annotations (Computer Vision)

I wanted to have some sort of ML/AI features for automated labels, text extraction (OCR), adult content detection, landmark detection. I’m currently using Google Cloud Vision API to achieve that, but there’s an option to plug in other models/APIs in the future.

Annotations require a separate request (this may change in the future):

    curl -X POST http://pixelpeeper.imgkit.io/api/images/img_2Ifubn3mGqAfzZulPcXrsPoyagM/annotations \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer <API_KEY>" \
      --data '{
        "annotations": ["labels", "landmarks", "properties", "text", "web_entities"],
        "passthrough": { "id_passed_back_to_the_client": 123 }
      }' | jq

Thumbnails

I wanted to be able to serve different variants of an image, without the need to pre-generate and store them. ImgKit allows me to create thumbnails on the fly and the results are cached using a combination of nginx and Cloudflare:

https://pixelpeeper.imgkit.io/transform/images/example-photos/20160910-DSC06091.jpg?h=600&s=50e05be64737c793d6938440f4ddd2d1b8e827e0&w=600

The "s" parameter prevents users from tampering with the URL and generating arbitrary variants of the same image. The signature is an HMAC digest of the URL, computed with a private API key, so the server can check that the URL is authentic and has not been modified.
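The signing scheme can be sketched with the standard library alone. This is a minimal sketch under assumptions, not ImgKit's actual code: the post doesn't say which hash function or which parts of the URL are signed, so the example assumes HMAC-SHA1 (the 40-character signature above is consistent with that) over the path plus the sorted query string. The names `signature`, `signURL` and `verifyURL` are hypothetical.

```go
package main

import (
	"crypto/hmac"
	"crypto/sha1"
	"encoding/hex"
	"fmt"
	"net/url"
)

// signature computes a hex-encoded HMAC-SHA1 over the path and the query
// string with any existing "s" parameter removed. url.Values.Encode sorts
// the keys, so the order of parameters in the URL doesn't matter.
func signature(secret []byte, u *url.URL) string {
	q := u.Query()
	q.Del("s")
	mac := hmac.New(sha1.New, secret)
	mac.Write([]byte(u.Path + "?" + q.Encode()))
	return hex.EncodeToString(mac.Sum(nil))
}

// signURL returns rawURL with the "s" parameter added.
func signURL(secret []byte, rawURL string) (string, error) {
	u, err := url.Parse(rawURL)
	if err != nil {
		return "", err
	}
	q := u.Query()
	q.Set("s", signature(secret, u))
	u.RawQuery = q.Encode()
	return u.String(), nil
}

// verifyURL reports whether rawURL carries a signature that matches its
// path and parameters. hmac.Equal compares in constant time.
func verifyURL(secret []byte, rawURL string) bool {
	u, err := url.Parse(rawURL)
	if err != nil {
		return false
	}
	got := u.Query().Get("s")
	want := signature(secret, u)
	return hmac.Equal([]byte(got), []byte(want))
}

func main() {
	secret := []byte("private-api-key") // placeholder secret
	signed, err := signURL(secret, "https://example.com/transform/images/photo.jpg?w=600&h=600")
	if err != nil {
		panic(err)
	}
	fmt.Println(signed)
	fmt.Println(verifyURL(secret, signed))
}
```

Changing any parameter (say, `w=600` to `w=6000`) changes the expected digest, so a modified URL is rejected unless the client also knows the secret.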

Thumbnails are processed using the libvips library.

Screenshots

I wanted to have an option to generate dynamic social card images (Open Graph for Facebook, Twitter cards, Pinterest etc.) and the easiest way to achieve that is to serve a special HTML page and take a screenshot of it using Puppeteer (this service is not exposed to the public).

This is how a /screenshot URL looks:

https://pixelpeeper.imgkit.io/screenshot?filename=x6zmxhkj&height=630&preferDark=true&s=0438999a1a7c22a4675e26d2a836696cc4d56c7b&scaleFactor=1&type=jpeg&url=https%3A%2F%2Fpixelpeeper.com%2Fsocial%2Fx6zmxhkj%2Ffacebook%3Fv%3D2&width=1200

When the Go app receives this request, it checks the signature (to prevent tampering with URLs) and forwards the request to the actual screenshot service (a Node.js app running Puppeteer). Puppeteer returns the image and the Go app streams the response back to the user.
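The check-then-forward flow described above might look roughly like this. It is a sketch under assumptions: it uses plain `net/http` instead of Gin to stay self-contained, `screenshotProxy` and `demo` are hypothetical names, the `verify` callback stands in for the real signature check, and the Puppeteer service is replaced by a fake `httptest` server.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"net/url"
)

// screenshotProxy rejects requests that fail verify and otherwise forwards
// the query string to the internal screenshot service, streaming the
// response body back to the client.
func screenshotProxy(upstream string, verify func(*url.URL) bool) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if !verify(r.URL) {
			http.Error(w, "invalid signature", http.StatusForbidden)
			return
		}
		req, err := http.NewRequestWithContext(r.Context(), http.MethodGet, upstream+"?"+r.URL.RawQuery, nil)
		if err != nil {
			http.Error(w, "bad request", http.StatusBadRequest)
			return
		}
		resp, err := http.DefaultClient.Do(req)
		if err != nil {
			http.Error(w, "screenshot service unavailable", http.StatusBadGateway)
			return
		}
		defer resp.Body.Close()
		w.Header().Set("Content-Type", resp.Header.Get("Content-Type"))
		w.WriteHeader(resp.StatusCode)
		io.Copy(w, resp.Body) // stream without buffering the whole image
	})
}

// demo runs the proxy against a fake upstream and returns the status and
// body of an accepted request plus the status of a rejected one.
func demo() (okStatus int, okBody string, deniedStatus int) {
	fake := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "image/jpeg")
		io.WriteString(w, "fake-jpeg-bytes")
	}))
	defer fake.Close()

	// Placeholder check; a real one would recompute the HMAC of the URL.
	verify := func(u *url.URL) bool { return u.Query().Get("s") == "valid" }
	h := screenshotProxy(fake.URL+"/screenshot", verify)

	ok := httptest.NewRecorder()
	h.ServeHTTP(ok, httptest.NewRequest(http.MethodGet, "/screenshot?s=valid&width=1200", nil))
	denied := httptest.NewRecorder()
	h.ServeHTTP(denied, httptest.NewRequest(http.MethodGet, "/screenshot?s=nope", nil))
	return ok.Code, ok.Body.String(), denied.Code
}

func main() {
	fmt.Println(demo())
}
```

Because the body is copied straight from the upstream response to the client, the Go process never has to hold a full screenshot in memory.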

Cache and CDN

Thumbnails and screenshots can take a few seconds to process, so I want them to be generated once and then served from cache.

The Go app doesn’t concern itself with caching (apart from sending the Cache-Control header). The actual caching is done by nginx, which stores results in the file system and serves them (through Cloudflare) to the user. Cloudflare provides an additional layer of caching and CDN.

There’s also another type of caching: every image is downloaded once from the source (e.g. S3) and stored locally for subsequent requests. That way, the original image doesn’t have to be re-downloaded for each thumbnail variant. There’s room for improvement here, but as long as the app runs on a single machine, it will work well.
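A download-once cache for originals can be as small as the sketch below. The layout is an assumption (one file per source URL, named after the SHA-256 of the URL), and `cachePath`, `fetchOnce` and the `download` callback are hypothetical names. For brevity the callback returns the whole file as a byte slice; a real implementation would more likely stream to disk.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"os"
	"path/filepath"
)

// cachePath maps a source URL to a stable file name inside dir.
func cachePath(dir, srcURL string) string {
	sum := sha256.Sum256([]byte(srcURL))
	return filepath.Join(dir, hex.EncodeToString(sum[:]))
}

// fetchOnce returns the local path of the original image, calling download
// only when the file isn't in the cache yet.
func fetchOnce(dir, srcURL string, download func(string) ([]byte, error)) (string, error) {
	path := cachePath(dir, srcURL)
	if _, err := os.Stat(path); err == nil {
		return path, nil // cache hit
	}
	data, err := download(srcURL)
	if err != nil {
		return "", err
	}
	if err := os.MkdirAll(dir, 0o755); err != nil {
		return "", err
	}
	// Write to a temp file and rename, so readers never see a partial file.
	tmp, err := os.CreateTemp(dir, "download-*")
	if err != nil {
		return "", err
	}
	defer os.Remove(tmp.Name()) // fails harmlessly after a successful rename
	if _, err := tmp.Write(data); err != nil {
		tmp.Close()
		return "", err
	}
	if err := tmp.Close(); err != nil {
		return "", err
	}
	if err := os.Rename(tmp.Name(), path); err != nil {
		return "", err
	}
	return path, nil
}

func main() {
	dir, err := os.MkdirTemp("", "imgkit-cache")
	if err != nil {
		panic(err)
	}
	defer os.RemoveAll(dir)

	calls := 0
	download := func(string) ([]byte, error) { // stand-in for an HTTP GET
		calls++
		return []byte("image bytes"), nil
	}
	for i := 0; i < 3; i++ {
		if _, err := fetchOnce(dir, "https://example.com/photo.jpg", download); err != nil {
			panic(err)
		}
	}
	fmt.Println("downloads:", calls)
}
```

This only works while every request hits the same disk, which matches the single-machine caveat above.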

Detecting duplicates

This is the part I’m still working on: I want to be able to detect duplicated (but not exactly identical) images. Currently ImgKit calculates pHash and dHash for each image and stores the results in the database.
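To give an idea of what such a hash is, here is a simplified dHash sketch. It is not ImgKit's implementation: it samples a 9x8 grid with nearest-neighbour lookups instead of properly downscaling the image, and `dHash` and `horizontalGradient` are hypothetical names.

```go
package main

import (
	"fmt"
	"image"
	"image/color"
)

// dHash computes a 64-bit difference hash: the image is reduced to a 9x8
// grayscale grid and each bit records whether a cell is brighter than its
// right-hand neighbour.
func dHash(img image.Image) uint64 {
	const w, h = 9, 8
	b := img.Bounds()
	var gray [h][w]uint8
	for y := 0; y < h; y++ {
		for x := 0; x < w; x++ {
			// Sample the pixel at the centre of each grid cell. A real
			// implementation would average or resize instead.
			px := b.Min.X + (2*x+1)*b.Dx()/(2*w)
			py := b.Min.Y + (2*y+1)*b.Dy()/(2*h)
			gray[y][x] = color.GrayModel.Convert(img.At(px, py)).(color.Gray).Y
		}
	}
	var hash uint64
	for y := 0; y < h; y++ {
		for x := 0; x < w-1; x++ {
			hash <<= 1
			if gray[y][x] > gray[y][x+1] {
				hash |= 1
			}
		}
	}
	return hash
}

// horizontalGradient builds a test image that fades from one side to the other.
func horizontalGradient(width, height int, brightLeft bool) *image.Gray {
	img := image.NewGray(image.Rect(0, 0, width, height))
	for y := 0; y < height; y++ {
		for x := 0; x < width; x++ {
			v := uint8(x * 255 / (width - 1))
			if brightLeft {
				v = 255 - v
			}
			img.SetGray(x, y, color.Gray{Y: v})
		}
	}
	return img
}

func main() {
	fmt.Printf("%016x\n", dHash(horizontalGradient(90, 80, true)))  // ffffffffffffffff
	fmt.Printf("%016x\n", dHash(horizontalGradient(90, 80, false))) // 0000000000000000
}
```

Because only the relative brightness of neighbouring cells matters, resized or slightly recompressed copies of an image tend to produce hashes that differ in only a few bits.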

The actual finding of duplicates/similar images is tricky to implement in a generic manner. Cloudinary leaves this part to the client and I’m currently leaning towards the same approach.

The reason is that pHash values are compared by Hamming distance: the number of bits in which two hashes differ. In the general case, you have to compare the pHash of a new image with the hash of every image you already have in the database, calculating the Hamming distance for each pair. The cost of that scan grows with the size of the collection, so you’ll always want to limit the number of images to compare against.
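For 64-bit hashes the comparison itself is cheap: XOR the two values and count the set bits. The sketch below shows that and the naive linear scan; the `hashedImage` type, the `similar` function and the distance threshold are illustrative assumptions.

```go
package main

import (
	"fmt"
	"math/bits"
)

// hashedImage pairs an image ID with its 64-bit perceptual hash.
type hashedImage struct {
	ID   string
	Hash uint64
}

// hammingDistance counts the bits in which a and b differ.
func hammingDistance(a, b uint64) int {
	return bits.OnesCount64(a ^ b)
}

// similar returns the IDs of stored images whose hash is within maxDistance
// bits of target. It visits every stored hash, which is exactly the linear
// scan that becomes expensive as the collection grows.
func similar(target uint64, stored []hashedImage, maxDistance int) []string {
	var ids []string
	for _, img := range stored {
		if hammingDistance(target, img.Hash) <= maxDistance {
			ids = append(ids, img.ID)
		}
	}
	return ids
}

func main() {
	stored := []hashedImage{
		{ID: "img_a", Hash: 0xFF},
		{ID: "img_b", Hash: 0xFE},
		{ID: "img_c", Hash: 0x00},
	}
	fmt.Println(hammingDistance(0xFF, 0xFE)) // 1
	fmt.Println(similar(0xFF, stored, 1))    // [img_a img_b]
}
```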

I want to explore the option to store hashes in a VP-Tree for quick lookup, but I haven’t had the time to dive into it yet.

Background worker

The background worker is currently used to: process images, fetch Cloud Vision annotations and post webhooks. It handles retries and exponential backoff for failed tasks.
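The post doesn't give the retry schedule, so the following is only an illustration of exponential backoff: the delay doubles with each failed attempt until it reaches a cap. The `backoff` function, the one-second base and the ten-second cap are assumptions.

```go
package main

import (
	"fmt"
	"time"
)

// backoff returns the delay before the given attempt (1-based): base for the
// first retry, doubling each time, never exceeding limit.
func backoff(attempt int, base, limit time.Duration) time.Duration {
	d := base
	// Stop doubling once the cap is reached, which also avoids overflow.
	for i := 1; i < attempt && d < limit; i++ {
		d *= 2
	}
	if d > limit {
		d = limit
	}
	return d
}

func main() {
	for attempt := 1; attempt <= 6; attempt++ {
		fmt.Println(attempt, backoff(attempt, time.Second, 10*time.Second))
	}
}
```

A worker that polls a tasks table can store the result as the task's next run time instead of sleeping, so a failing task doesn't block the others.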

Compared to Ruby, Go makes it quite easy to implement: my background worker is only ~150 lines of code — without any third-party library. It runs as a separate process that polls a dedicated database table for new tasks every N seconds. If it finds something to work on, it runs the job in a goroutine (limiting the number of concurrent jobs).
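The shape of such a worker can be sketched in a few dozen lines. This is not the actual ImgKit worker: `fetch` is a stand-in for the query against the tasks table, and `task`, `runWorker` and `demo` are hypothetical names. A buffered channel acts as a semaphore that limits the number of concurrent jobs.

```go
package main

import (
	"context"
	"fmt"
	"sync"
	"time"
)

type task struct{ ID int }

// runWorker calls fetch every interval and runs each returned task in its own
// goroutine, with at most maxConcurrent running at once. It returns after ctx
// is cancelled and all in-flight tasks have finished.
func runWorker(ctx context.Context, interval time.Duration, maxConcurrent int,
	fetch func() []task, handle func(task)) {
	sem := make(chan struct{}, maxConcurrent)
	var wg sync.WaitGroup
	defer wg.Wait()

	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	for {
		select {
		case <-ctx.Done():
			return
		case <-ticker.C:
			for _, t := range fetch() {
				sem <- struct{}{} // blocks while maxConcurrent jobs are running
				wg.Add(1)
				go func(t task) {
					defer wg.Done()
					defer func() { <-sem }()
					handle(t)
				}(t)
			}
		}
	}
}

// demo feeds five in-memory tasks through the worker and reports how many
// were processed and the highest number that ran at the same time.
func demo() (processed, peak int) {
	ctx, cancel := context.WithCancel(context.Background())
	defer cancel()

	var mu sync.Mutex
	pending := []task{{ID: 1}, {ID: 2}, {ID: 3}, {ID: 4}, {ID: 5}}
	running := 0

	fetch := func() []task { // a real worker would query the database here
		mu.Lock()
		defer mu.Unlock()
		batch := pending
		pending = nil
		return batch
	}
	handle := func(t task) {
		mu.Lock()
		running++
		if running > peak {
			peak = running
		}
		mu.Unlock()

		time.Sleep(5 * time.Millisecond) // simulated work

		mu.Lock()
		running--
		processed++
		if processed == 5 {
			cancel() // stop the worker once everything is done
		}
		mu.Unlock()
	}
	runWorker(ctx, time.Millisecond, 2, fetch, handle)
	return processed, peak
}

func main() {
	fmt.Println(demo())
}
```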

Deployment

The deployment part can still be improved. Currently, the Go processes run on a single VPS. There’s no Kubernetes or any other fancy process manager (apart from systemd), which means that every deployment results in a short downtime while the API process is being restarted. Currently the restart takes less than 1s and the load on the server is small enough that I’ve accepted this trade-off. If I ever have to scale it, I’ll set up a Kubernetes cluster and the downtime problem will be gone [4].

As for the build part, I’m using GitHub Actions. Each push to the main branch triggers a workflow that installs the dependencies, runs tests, builds the binaries, copies them to the VPS and runs systemctl restart imgkit-worker && systemctl restart imgkit-api. Quite simple and it works fine (for the current state of the app).

Go: my first impressions

This was my first Go project, so I thought I’d share my first impressions of the language. This is coming from someone who has spent the last few years working mostly with Ruby and TypeScript.

Ruby and (especially) Rails have great documentation. Go… not so much. There’s the amazing Go by Example, but the official docs aren’t very detailed, they lack examples and I’ve found myself having to read the source of Go and third-party libraries quite regularly.

I spent way too much time making trivial decisions that Rails makes for me: where to put this part of the code? should this function go into this package? should this be a function or a struct method? how do I organize the database models? should they be in a separate package? etc., etc. Thankfully, I had some help with these questions [5].

Compared to TypeScript, the type system is refreshingly simple. As much as I appreciate TS, it can be way too verbose and after a while it’s easy to end up with too many types (unions, generics) that clutter the code with unreadable series of type annotations, the sole purpose of which is to make TypeScript stop complaining. Maybe my project is still too small, but so far I’ve never felt this way about Go types: they’ve only been helpful, without the impression of obscuring the code.

Compared to Ruby, Go makes some difficult things easy (e.g. concurrency) and some easy things difficult (string/array/slice operations). The standard library is quite low-level, and I found it surprising that it lacks some of the more common functions. I’ve found myself writing lots of helper code for basic operations (finding an element in a slice, checking if a file exists, calculating sha1, handling nullable strings etc.).

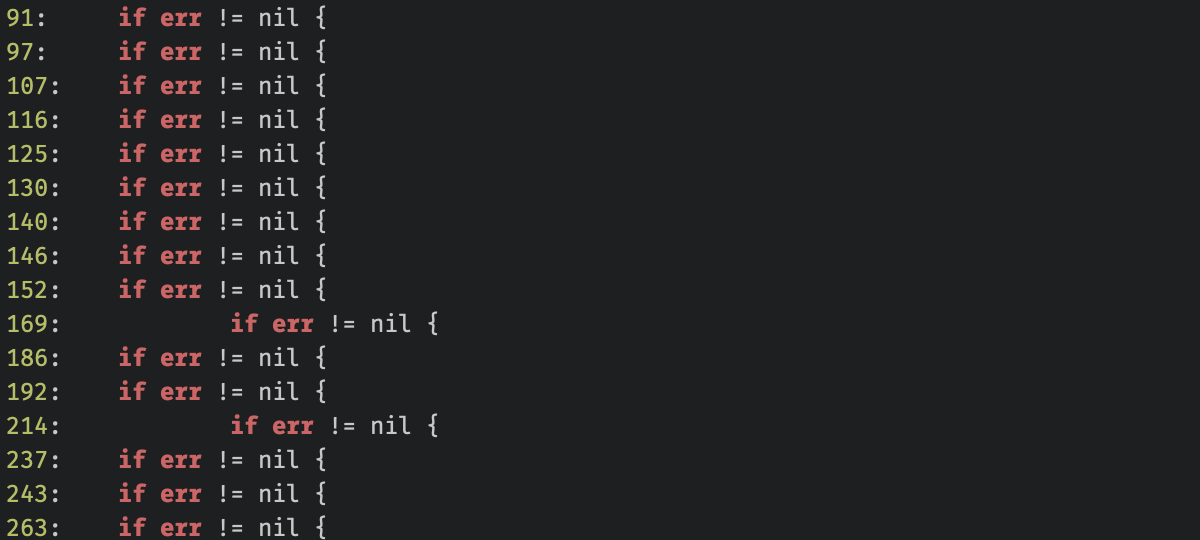

Error handling… At first, it seemed like the explicit built-in syntax for error handling would make it easy to work with. Turns out, the specifics and conventions around error handling are still unclear and it seems like everyone has their own version of what error handling should look like. Also, having to type yet another if err != nil block every few lines gets tedious quickly.

That said, I’ve found the process of writing Go quite enjoyable and had a lot of fun learning it. It’s no longer a brand new thing, so there are a lot of great libraries to choose from and StackOverflow answers to cover most of the issues I encountered. The type system is a pleasure to work with and makes refactoring stress-free. Go wouldn’t be my first choice for a web app or business logic, but for this type of project, I’ve found it to be a perfect match. So far, I’m happy with the results.

[1] I’m perfectly aware that similar services already exist, but none of them does exactly what I want in a single package.

[2] The features I described are available for paid users only (via “Paste image URL”). The free version uses local files which (for privacy reasons) aren’t uploaded anywhere and the metadata is extracted in the browser using JavaScript.

[3] I also considered Rust, but I’ve found Go a lot less overwhelming syntax-wise. Maybe next time.

[4] Kubernetes will introduce other challenges, e.g. with the caching part.

[5] Big thanks to Łukasz who patiently answered my noob questions and reviewed my code.